Distributed systems are collections of independent computers that work together as a single, cohesive unit to solve problems too large for one machine. They enable scalability, reliability, and efficient resource utilization, for example when an application must serve thousands of concurrent users without degrading performance. Understanding distributed systems helps developers design efficient, fault-tolerant applications, which is crucial for modern software architectures.

What Are Distributed Systems?

A distributed system consists of independent computers that communicate over a network, sharing resources and coordinating tasks to achieve common goals. Distributing workloads across multiple machines enables scalability, fault tolerance, and improved performance. Examples include cloud computing platforms, the backends of mobile apps, and large-scale enterprise applications. A well-designed distributed system aims to appear to end users as a single system, hiding the fact that the work is spread across many machines.

Importance of Distributed Systems

Distributed systems are crucial for modern computing because they let applications scale and tolerate failures. They allow resource sharing, improve performance, and handle large-scale data efficiently. These systems underpin technologies like cloud computing, mobile apps, and real-time systems. Their ability to manage failures and scale operations makes them essential for global enterprises and services, supporting the growing demands of digital infrastructure.

Key Challenges in Distributed Systems

Distributed systems face challenges like communication complexity, data consistency, scalability, and fault tolerance, requiring careful design and coordination to ensure reliability and performance.

Communication Complexity

Communication complexity is a significant challenge in distributed systems, arising from the need for nodes to exchange messages efficiently. As systems scale, the overhead of message passing increases, leading to potential bottlenecks and delays. Network latency and bandwidth limitations further complicate communication, requiring careful design to minimize overhead and ensure reliable data transmission.

Addressing communication complexity involves robust algorithms and protocols to manage data flow and synchronization. These solutions are critical for maintaining system performance and ensuring that distributed systems operate efficiently despite the inherent challenges of communication in a decentralized environment.
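To make the scaling claim concrete, the following minimal sketch counts communication links in two layouts: one where every node talks to every other node, and one where all nodes talk only to a single coordinator. The function names are hypothetical and the model ignores message size and retries; it is meant only to show how quickly pairwise communication grows.

```python
def full_mesh_links(n: int) -> int:
    """Distinct node pairs when every node talks to every other node."""
    return n * (n - 1) // 2


def star_links(n: int) -> int:
    """Links when every node talks only to one coordinator node."""
    return max(n - 1, 0)


for n in (4, 16, 64):
    # Prints: node count, full-mesh links, star links
    print(n, full_mesh_links(n), star_links(n))
```

Full-mesh links grow quadratically with the number of nodes, while the coordinator layout grows linearly at the cost of concentrating traffic on one node.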

Data Consistency Models

Data consistency models define what a reader may observe after data has been updated somewhere in a distributed system. They balance consistency, availability, and performance. Strong consistency guarantees that every read reflects the most recent write, as if there were a single copy of the data, while eventual consistency allows replicas to disagree temporarily as long as they converge once updates stop. These models are vital for managing trade-offs in distributed systems and for keeping behavior predictable, especially in failure scenarios. Understanding them helps developers design scalable and fault-tolerant systems effectively.
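As an illustration of eventual consistency, the sketch below lets two replicas diverge and then converge by exchanging state. It assumes a last-write-wins rule based on logical timestamps, which is only one of several possible reconciliation strategies; the `merge` function and the sample data are hypothetical, and ties between equal timestamps are not handled.

```python
def merge(local: dict, remote: dict) -> dict:
    """Last-write-wins merge: per key, keep the entry with the larger timestamp."""
    merged = dict(local)
    for key, (ts, value) in remote.items():
        if key not in merged or ts > merged[key][0]:
            merged[key] = (ts, value)
    return merged


# Each entry is (logical_timestamp, value); the replicas accepted writes independently.
replica_a = {"cart": (1, ["book"])}
replica_b = {"cart": (2, ["book", "pen"]), "theme": (1, "dark")}

# Before synchronization the replicas disagree (a temporary discrepancy).
diverged = replica_a != replica_b

# After exchanging state in both directions they hold identical data.
replica_a = merge(replica_a, replica_b)
replica_b = merge(replica_b, replica_a)
converged = replica_a == replica_b

print(diverged, converged)
```

A strongly consistent store would not expose the diverged state to readers at all; it would coordinate replicas before acknowledging the write.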

Scalability

Scalability is the ability of a distributed system to handle increasing workloads without performance degradation. It involves adding resources, such as nodes or hardware, to manage growing demands. Horizontal scaling (adding more nodes) and vertical scaling (making each node more powerful) are the two common strategies. Distributed systems achieve scalability by distributing tasks across multiple machines, ensuring efficient resource utilization. This capability allows systems to adapt to growth and stay responsive as user demand escalates.
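One simple way to spread work across nodes when scaling horizontally is to hash each key and map it to a node. The sketch below assumes plain modulo hashing and hypothetical node names; it is not a recommendation over other partitioning schemes.

```python
import hashlib


def node_for(key: str, nodes: list[str]) -> str:
    """Map a key to a node by hashing the key and taking it modulo the node count."""
    digest = hashlib.sha256(key.encode()).hexdigest()
    return nodes[int(digest, 16) % len(nodes)]


nodes = ["node-0", "node-1", "node-2"]  # hypothetical node names
keys = [f"user-{i}" for i in range(9)]

# Each key is deterministically assigned to exactly one node.
placement = {key: node_for(key, nodes) for key in keys}
for key, node in placement.items():
    print(key, "->", node)
```

With modulo hashing, adding a node changes the assignment of most keys. Schemes such as consistent hashing exist to reduce that reshuffling.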

Fault Tolerance

Fault tolerance is critical in distributed systems, ensuring they remain operational despite hardware or software failures. By designing systems to detect and recover from failures, fault tolerance maintains service availability and reliability. Distributed systems achieve this through replication and redundancy, where data and services are duplicated across multiple nodes. This ensures that if one node fails, others can take over seamlessly. Fault tolerance is essential for modern applications, where uninterrupted service is expected, and it directly impacts the system’s ability to handle failures without compromising performance or user experience.
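The idea that another node can take over when one fails can be sketched as a client that tries replicas in order. Everything here is hypothetical: `make_node` simulates a node with a fixed health state, and real systems would add timeouts, health checks, and retries.

```python
class NodeDown(Exception):
    """Raised by a simulated node that is not healthy."""


def make_node(name: str, healthy: bool):
    """Return a callable that simulates a node handling a request."""
    def handle(request: str) -> str:
        if not healthy:
            raise NodeDown(name)
        return f"{name} handled {request}"
    return handle


def call_with_failover(replicas, request: str) -> str:
    """Try each replica in order and return the first successful response."""
    failed = []
    for replica in replicas:
        try:
            return replica(request)
        except NodeDown as exc:
            failed.append(str(exc))
    raise RuntimeError(f"all replicas failed: {failed}")


replicas = [make_node("node-a", healthy=False), make_node("node-b", healthy=True)]
print(call_with_failover(replicas, "GET /orders"))
```

The caller never sees the failure of the first node, which is the behavior redundancy is meant to provide.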

Core Concepts of Distributed Systems

Distributed systems rely on key concepts like the network stack, CAP theorem, data replication, and consensus algorithms. These principles ensure efficient communication, scalability, and data consistency across systems.

Network Stack

The network stack is a foundational component of distributed systems, enabling communication between nodes. In the OSI reference model it comprises seven layers: physical, data link, network, transport, session, presentation, and application. Together these layers handle data transmission, routing, and protocol management. Understanding the network stack is crucial for designing efficient distributed systems, as it directly impacts performance, reliability, and scalability. Proper configuration and tuning of the stack support reliable data exchange, which is essential for keeping a distributed system consistent and responsive.

CAP Theorem

The CAP theorem, also known as Brewer’s theorem, states that a distributed system cannot simultaneously guarantee all three of the following: consistency, availability, and partition tolerance. Consistency means every read sees the most recent write, availability means every request to a working node receives a response, and partition tolerance means the system keeps functioning when the network drops or delays messages between nodes. Because network partitions cannot be ruled out in practice, the real trade-off is between consistency and availability while a partition lasts. Understanding these constraints helps developers decide which guarantee to relax for a given workload.
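The trade-off can be illustrated with a toy replica that is cut off from its peers. The `Replica` class and its `"CP"` and `"AP"` mode labels are assumptions made for this sketch: a CP-style replica refuses requests it cannot confirm, while an AP-style replica answers with whatever it has.

```python
class Replica:
    """Toy replica whose behavior during a partition depends on its mode."""

    def __init__(self, mode: str):
        self.mode = mode          # "CP" or "AP" (labels used only in this sketch)
        self.value = "v1"         # last value this replica knows about
        self.partitioned = False  # True when cut off from the other replicas

    def read(self) -> str:
        if self.partitioned and self.mode == "CP":
            # Prefer consistency: refuse rather than risk a stale answer.
            raise RuntimeError("unavailable: cannot confirm latest value")
        # Prefer availability: answer even if the value may be stale.
        return self.value


cp, ap = Replica("CP"), Replica("AP")
cp.partitioned = ap.partitioned = True

print("AP read:", ap.read())
try:
    cp.read()
except RuntimeError as exc:
    print("CP read:", exc)
```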

Data Replication

Data replication is the process of maintaining multiple copies of data across different nodes in a distributed system to ensure availability and durability. It enables systems to tolerate failures and can reduce latency by serving reads from a nearby copy. Replication strategies vary: with synchronous updates a write is acknowledged only after the replicas have it, while with asynchronous updates the write is acknowledged first and copied later. Consistency models, such as eventual consistency, are often used to manage discrepancies between replicas. Well-designed replication keeps data accessible during node failures and, depending on the consistency model, during network partitions, making it a critical component of scalable and fault-tolerant distributed systems.
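The difference between synchronous and asynchronous updates can be sketched with a primary that copies records to replica logs. The `Primary` class is hypothetical, and plain Python lists stand in for replica nodes.

```python
class Primary:
    """Toy primary that replicates writes either synchronously or asynchronously."""

    def __init__(self, replicas: list[list], synchronous: bool):
        self.log = []
        self.replicas = replicas      # each replica is modeled as a list of records
        self.synchronous = synchronous
        self.backlog = []             # records not yet copied to the replicas

    def write(self, record: str) -> str:
        self.log.append(record)
        if self.synchronous:
            # Copy to every replica before acknowledging the write.
            for replica in self.replicas:
                replica.append(record)
        else:
            # Acknowledge immediately; replicas catch up when flush() runs.
            self.backlog.append(record)
        return "ack"

    def flush(self) -> None:
        """Deliver any backlog to the replicas (asynchronous mode)."""
        for record in self.backlog:
            for replica in self.replicas:
                replica.append(record)
        self.backlog.clear()


sync_replica, async_replica = [], []
Primary([sync_replica], synchronous=True).write("x=1")
lazy = Primary([async_replica], synchronous=False)
lazy.write("x=1")
print(sync_replica, async_replica)  # the async replica is still empty here
lazy.flush()
print(async_replica)
```

The asynchronous primary acknowledges sooner, but a crash before `flush()` would leave the replica without the record.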

Consensus Algorithms

Consensus algorithms are mechanisms that enable nodes in a distributed system to agree on a single value or state, ensuring system-wide consistency. These algorithms, such as Raft or Paxos, are crucial for maintaining data integrity and system reliability. They address challenges like network partitions and node failures, allowing the system to reach agreement despite potential failures. By ensuring all nodes converge on the same state, consensus algorithms are fundamental to achieving fault tolerance and coordination in distributed systems, enabling them to operate reliably and efficiently in dynamic environments.
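Raft and Paxos are too involved to reproduce here, but both rely on the idea that a decision needs support from a majority of nodes. The sketch below shows only that majority rule in a single round. It is not an implementation of either algorithm, and the function name and vote format are assumptions.

```python
from collections import Counter


def majority_value(votes: list):
    """Return the value backed by a strict majority of ALL nodes, else None.

    `votes` has one entry per node; None marks a node that did not respond.
    """
    responding = [vote for vote in votes if vote is not None]
    if not responding:
        return None
    value, count = Counter(responding).most_common(1)[0]
    return value if count > len(votes) // 2 else None


# Five nodes, one unreachable: three votes for "x" is a majority of five.
print(majority_value(["x", "x", None, "y", "x"]))
# Four nodes, two unreachable: no value can reach a majority.
print(majority_value(["x", "y", None, None]))
```

Requiring a majority of all nodes, not just of the responders, is what prevents two disconnected groups from deciding different values.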

Practical Applications of Distributed Systems

Distributed systems power cloud computing, enabling scalable resource management. They underpin microservices architectures, allowing modular and resilient application development. Real-time systems rely on them for instant data processing.

Cloud Computing

Cloud computing relies on distributed systems to deliver scalable and on-demand computing resources over the internet. It enables organizations to access vast computing power, storage, and services without owning physical infrastructure. Distributed systems are the backbone of cloud platforms like AWS and Azure, ensuring high availability, fault tolerance, and resource optimization. By leveraging distributed architectures, cloud providers can manage millions of servers, storage systems, and applications globally, offering services like SaaS, PaaS, and IaaS efficiently. This model has revolutionized IT, providing unparalleled scalability and cost-effectiveness.

Microservices Architecture

Microservices architecture is a design approach that structures an application as a collection of loosely coupled, independently deployable services. Each service handles a specific business function and can be developed, deployed, and scaled separately. This modular approach enhances scalability, flexibility, and fault isolation, making it easier to manage complex systems. Microservices align well with distributed systems, enabling organizations to build resilient, adaptable, and highly available applications. By breaking down monolithic systems into smaller, focused components, microservices promote innovation and efficiency in modern software development.

Real-Time Systems

Real-time systems require predictable and timely responses to events, and many of them are built as distributed systems. They are critical in applications like control systems, embedded devices, and live data processing, where a late response can count as a failure. These systems must manage resources efficiently to ensure low latency and high reliability. By leveraging distributed architectures, real-time systems can handle tasks concurrently across multiple nodes. Understanding distributed systems is therefore essential for designing real-time applications that meet strict deadlines and handle priority tasks effectively.

Case Studies in Distributed Systems

Well-known examples of distributed systems include the infrastructure built by Google and Amazon, and blockchain networks, which enable decentralized, secure transactions across peer-to-peer networks without central control, ensuring fault tolerance and data integrity through consensus algorithms.

Google’s Distributed System

Google’s distributed system is a prime example of large-scale distributed architecture, enabling efficient data processing and storage. Technologies like MapReduce and Google File System (GFS) handle massive datasets across clusters, ensuring scalability and fault tolerance.

These systems allow Google to manage global data centers seamlessly, providing services like Search and Ads. Their design emphasizes consistency and reliability, making them a benchmark for distributed system design.
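The MapReduce programming model mentioned above can be sketched in a single process with a word count. This shows only the map, shuffle, and reduce phases; it is not Google’s implementation, and the function names and sample documents are hypothetical. In a real cluster each phase would run in parallel on many machines.

```python
from collections import defaultdict


def map_phase(document: str) -> list[tuple[str, int]]:
    """Emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs) -> dict[str, list[int]]:
    """Group all emitted values by key, as happens between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: dict[str, list[int]]) -> dict[str, int]:
    """Sum the grouped values for each key."""
    return {key: sum(values) for key, values in groups.items()}


documents = ["the quick fox", "the lazy dog", "the fox"]
pairs = [pair for doc in documents for pair in map_phase(doc)]
counts = reduce_phase(shuffle(pairs))
print(counts)
```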

Amazon’s Distributed Systems

Amazon’s distributed systems are a cornerstone of its global operations, enabling scalable and highly available services. Technologies like DynamoDB and S3 exemplify Amazon’s approach to distributed data management. These systems are designed for fault tolerance and consistent performance across millions of requests. Amazon’s architecture leverages service-oriented design, with microservices operating independently yet cohesively. This model supports rapid scalability and resilience, making Amazon’s distributed systems a benchmark for modern computing.

Blockchain Technology

Blockchain technology is a transformative application of distributed systems, enabling decentralized, secure, and transparent transaction processing. It relies on a peer-to-peer network where each node maintains a copy of the ledger, ensuring data consistency and security. Blockchain’s consensus mechanisms, like Proof of Work or Proof of Stake, solve the consensus problem, preventing fraud and ensuring trust without intermediaries. Its immutability and transparency make it ideal for applications requiring high security, such as cryptocurrencies, supply chain management, and smart contracts. Blockchain exemplifies a paradigm shift in distributed systems, offering solutions to traditional challenges and driving innovation across industries.
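The link between hashing, Proof of Work, and immutability can be sketched with a toy chain. This is a simplified illustration and not the block format of any real blockchain: the field names, the `|` separator, and the low difficulty are assumptions chosen to keep the example fast.

```python
import hashlib


def block_hash(prev_hash: str, data: str, nonce: int) -> str:
    """Hash the block contents together with the previous block's hash."""
    return hashlib.sha256(f"{prev_hash}|{data}|{nonce}".encode()).hexdigest()


def mine(prev_hash: str, data: str, difficulty: int = 2) -> dict:
    """Toy Proof of Work: find a nonce whose hash starts with `difficulty` zeros."""
    prefix = "0" * difficulty
    nonce = 0
    while not block_hash(prev_hash, data, nonce).startswith(prefix):
        nonce += 1
    return {"prev": prev_hash, "data": data, "nonce": nonce,
            "hash": block_hash(prev_hash, data, nonce)}


def is_valid(chain: list[dict], difficulty: int = 2) -> bool:
    """Check every block's hash, its proof of work, and its link to the previous block."""
    prefix = "0" * difficulty
    for i, block in enumerate(chain):
        expected = block_hash(block["prev"], block["data"], block["nonce"])
        if block["hash"] != expected or not expected.startswith(prefix):
            return False
        if i > 0 and block["prev"] != chain[i - 1]["hash"]:
            return False
    return True


genesis = mine("0" * 64, "genesis")
second = mine(genesis["hash"], "alice pays bob 5")
chain = [genesis, second]
print(is_valid(chain))

# Changing the data of an earlier block invalidates the chain.
tampered = [dict(genesis, data="forged"), second]
print(is_valid(tampered))
```

Because each block stores the hash of its predecessor, rewriting history requires redoing the work for every later block.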

Design Patterns for Distributed Systems

Distributed systems require scalable, reliable, and maintainable architectures. Design patterns like master-slave, peer-to-peer, and service-oriented architectures, together with techniques such as load balancing, provide solutions to common challenges, ensuring efficient communication and fault tolerance.

Master-Slave Architecture

The master-slave architecture is a common design pattern in distributed systems where one central node (master) coordinates tasks and data across multiple slave nodes. This model simplifies task distribution and consistency, as the master makes all decisions while slaves execute instructions. It suits scenarios requiring centralized control, such as distributed databases and file systems, but the master can become a performance bottleneck and is a single point of failure. Load balancing and failover strategies, such as promoting a standby node to master, are often used to mitigate these risks.
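The division of labor in this pattern can be sketched with a coordinator that splits a job and collects partial results. Threads stand in for worker nodes here, and the `master` and `worker` functions are hypothetical names; a real deployment would send the chunks over the network.

```python
from concurrent.futures import ThreadPoolExecutor


def worker(chunk: list[int]) -> int:
    """A worker executes the instruction it is given: sum its chunk."""
    return sum(chunk)


def master(numbers: list[int], n_workers: int = 3) -> int:
    """The master splits the job, hands chunks to workers, and combines the results."""
    chunks = [numbers[i::n_workers] for i in range(n_workers)]
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(worker, chunks))
    return sum(partials)


print(master(list(range(1, 101))))
```

All decisions, including how to split the work, live in `master`, which is also why its failure stops the whole job.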

Peer-to-Peer Architecture

Peer-to-peer (P2P) architecture is a decentralized system where each node acts as both a client and a server. This design eliminates the need for a central authority, enabling direct resource sharing and communication between nodes. P2P systems are highly scalable and fault-tolerant, as there’s no single point of failure. They are commonly used in file-sharing networks and blockchain technology. However, the lack of central control can lead to challenges in maintaining data consistency and security, requiring robust mechanisms for coordination and trust management.

Load Balancing

Load balancing is a critical technique in distributed systems to distribute workload evenly across multiple servers, ensuring optimal performance and reliability. It prevents any single server from becoming a bottleneck by redirecting incoming requests based on factors like current load or response time. This approach improves resource utilization, enhances fault tolerance, and ensures responsiveness. Load balancing is essential for scalability, particularly in cloud computing and microservices architectures, where dynamic traffic management is crucial for maintaining system efficiency and user satisfaction.
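Two common selection strategies are sketched below: round robin, which ignores load, and least connections, which picks the server with the fewest active requests. The class names and server names are hypothetical, and real load balancers also track health and timeouts.

```python
import itertools


class RoundRobin:
    """Hand out servers in a fixed rotation, regardless of their load."""

    def __init__(self, servers: list[str]):
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnections:
    """Pick the server that currently has the fewest active requests."""

    def __init__(self, servers: list[str]):
        self.active = {server: 0 for server in servers}

    def pick(self) -> str:
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        """Call when a request finishes so the server's count goes back down."""
        self.active[server] -= 1


rr = RoundRobin(["a", "b", "c"])
print([rr.pick() for _ in range(4)])

lc = LeastConnections(["a", "b", "c"])
first_three = [lc.pick() for _ in range(3)]
lc.release("b")  # server b finishes its request
print(first_three, lc.pick())
```

Least connections adapts when requests take uneven time, whereas round robin is simpler and needs no shared state about load.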

Service-Oriented Architecture

Service-Oriented Architecture (SOA) is a design approach that structures systems as collections of modular, reusable services. These services communicate using standardized protocols, enabling seamless integration across diverse systems. SOA promotes loose coupling, scalability, and flexibility, making it ideal for dynamic environments like cloud computing and microservices. By breaking systems into independent, interoperable components, SOA enhances modularity, reusability, and maintainability. It is widely adopted in distributed systems to address complex integration challenges and support business agility, aligning IT infrastructure with organizational goals effectively.

Future Trends in Distributed Systems

Distributed systems are evolving toward edge computing, serverless computing, and AI integration, enhancing scalability, efficiency, and real-time processing capabilities for modern applications and services.

Edge Computing

Edge computing represents a significant shift in distributed systems by processing data closer to its source, reducing latency and bandwidth usage. This approach optimizes performance for real-time applications like IoT devices, autonomous vehicles, and smart grids. By decentralizing computation, edge computing enhances scalability and reduces reliance on centralized cloud infrastructure. It enables faster decision-making and improves efficiency in distributed systems, making it a critical trend for future advancements in technology and application development.

Serverless Computing

Serverless computing is a paradigm where the cloud provider manages infrastructure, allowing developers to focus on code. In its function-as-a-service form, developers deploy individual functions and the provider handles server provisioning and management, enabling scalable and cost-efficient solutions. By abstracting underlying resources, serverless platforms automatically adjust capacity to demand, reducing operational overhead. This approach is particularly beneficial for event-driven applications, making it an important part of modern distributed systems and a key enabler of efficient, scalable, and maintainable software architectures.

AI in Distributed Systems

AI is transforming distributed systems by enhancing scalability, fault tolerance, and decision-making. Machine learning algorithms optimize resource allocation, predict system behavior, and detect anomalies in real time. AI-driven automation enables self-healing systems, reducing downtime and improving reliability. Distributed AI approaches, like federated learning, let nodes train a shared model without sending their raw data to a central location, which helps preserve data privacy. As AI advances, it helps distributed systems adapt dynamically, maintaining performance and resilience in complex environments.
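The core step of federated learning can be sketched as a weighted average of model weights that clients trained locally. This assumes the common federated averaging scheme, in which only weights and sample counts leave each client; the function name and the numbers are hypothetical, and local training itself is omitted.

```python
def federated_average(client_updates: list[tuple[list[float], int]]) -> list[float]:
    """Average client weight vectors, weighting each by its number of samples.

    `client_updates` holds (weights, n_samples) pairs; raw data never appears here.
    """
    total_samples = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_samples
        for i in range(dim)
    ]


# Two clients with locally trained weights and different amounts of data.
updates = [([1.0, 2.0], 1), ([3.0, 4.0], 3)]
print(federated_average(updates))
```

The client with more samples pulls the shared model closer to its own weights, while its training data stays on the device.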

Conclusion

Distributed systems are fundamental to modern computing, enabling scalable, reliable, and efficient solutions across a wide range of applications. They represent a shift from monolithic architectures to interconnected components that together handle workloads such as cloud computing and real-time applications. Understanding them requires grasping concepts like the CAP theorem, data replication, and consensus algorithms, and developers who master these principles can build robust, large-scale applications.

Final Thoughts

Distributed systems are pivotal in shaping modern computing, offering scalability, fault tolerance, and efficient resource management. They underpin cloud computing, real-time applications, and global networks. Mastering concepts like the CAP theorem, consensus algorithms, and data replication is essential for developers. As technology evolves, understanding distributed systems becomes crucial for building robust, scalable applications and for keeping systems efficient and resilient in an increasingly interconnected world.

Resources

Recommended reading includes books like “Understanding Distributed Systems,” offering deep insights into theoretical and practical concepts. Online courses provide hands-on learning, ensuring a comprehensive understanding of distributed systems.

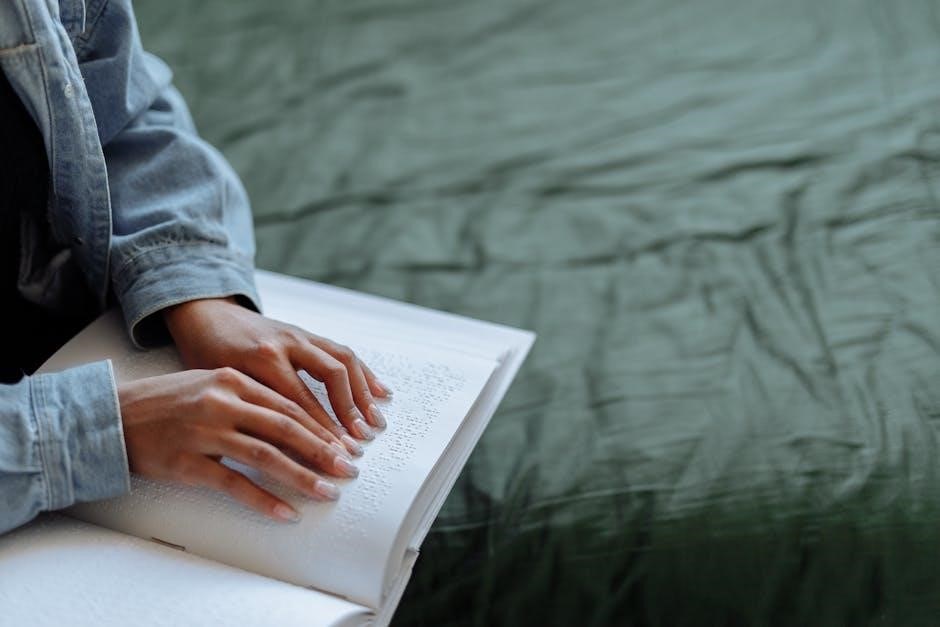

Recommended Reading

“Understanding Distributed Systems” is a comprehensive guide that bridges the gap between theory and practice. The book covers fundamental challenges like communication, coordination, and scalability, offering practical insights for designing efficient distributed systems. It suits both beginners and experienced professionals, providing detailed explanations and real-world examples. Available as a PDF, it is a useful resource for building a solid foundation in scalable and fault-tolerant application design.

Online Courses

For a deeper understanding, consider online courses that complement “Understanding Distributed Systems.” Platforms like Coursera and edX offer courses on distributed systems, covering scalability, fault tolerance, and consensus algorithms. These courses provide hands-on projects and real-world applications, helping learners master the concepts outlined in the guide. They suit both beginners and professionals seeking to enhance their skills in designing and operating distributed systems.